How Effective is History-based optimisations on BigQuery

Explore performance improvements based on common query patterns

History-based optimisations in BigQuery leverage insights from previously executed similar queries to introduce additional enhancements, aiming to improve performance metrics such as slot usage and query latency.

Google states that history-based optimisations can provide significant advantages, with slot time savings of up to 67%. This results in faster query execution for on-demand workloads and cost reductions for users with reserved slots—particularly relevant now that autoscaling has become the standard practice.

My objective is to test this feature with some common queries that many of us run daily, particularly in scheduled jobs. What interests me the most is not just the performance improvements for repeated queries, but the potential benefits claimed for similar queries.

If you prefer to watch a video about this instead, I’ve created one too :)

Enable history-based optimisations

History-based optimisation for BigQuery is now generally available (GA). According to the documentation, it is expected to be automatically rolled out and enabled for all projects in the future. However, for now, you need to enable it manually using the SQL command below.

Effect on Full Refresh Models

Many data engineers rely on truncate-and-insert jobs for datasets that aren’t particularly large and are updated infrequently, such as daily. This approach simplifies workflows by avoiding the complexity of managing delta tracking.

However, as the data size grows over time, costs can increase. For this type of workload, which typically runs daily, a new partition is added with additional data each time. Let’s explore the performance impact of enabling history-based optimisation for such a workload.

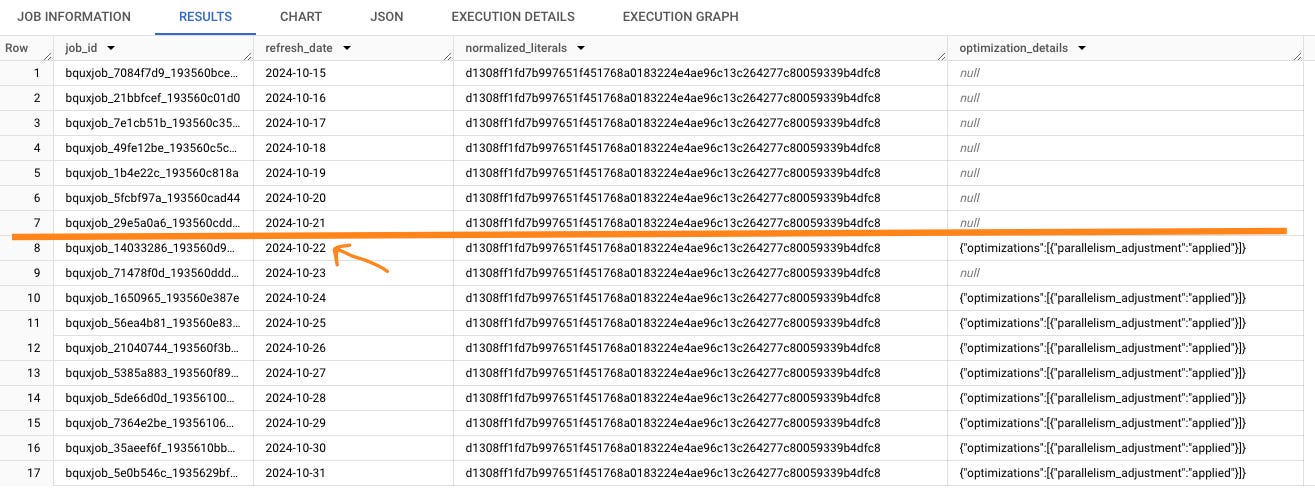

For this test, I used a moderately sized time-series dataset partitioned by refresh_date. My objective is to evaluate the impact of the optimisation by performing a full rebuild of the data, incrementally adding one extra day per query to simulate a real-world scenario.

My expectation is that while the optimisation may not be applied immediately, repeated execution of similar queries should allow the optimiser to recognise the pattern and begin applying optimisations after a few runs.

The results are quite interesting. One optimisation, parallelism_adjustment, is being applied, and it first shows up on the 22nd. I think there are two reasons behind this behaviour.

There wasn’t much data in the table until the 21st—only about 130MB in total, including all historical data up to the 20th. Starting on the 21st, around 400MB of data is added each day.

It seems that history-based optimisation requires at least one previously executed query of a similar size to be able to apply optimisations, which explains why there’s no optimisation applied on the 21st.

This shows that if there’s very little data, optimisation won’t happen, which makes perfect sense. However, the amazing part is that it only takes one similar query from the past for the optimiser to start improving the performance of new queries. But what’s the real impact of this optimisation?

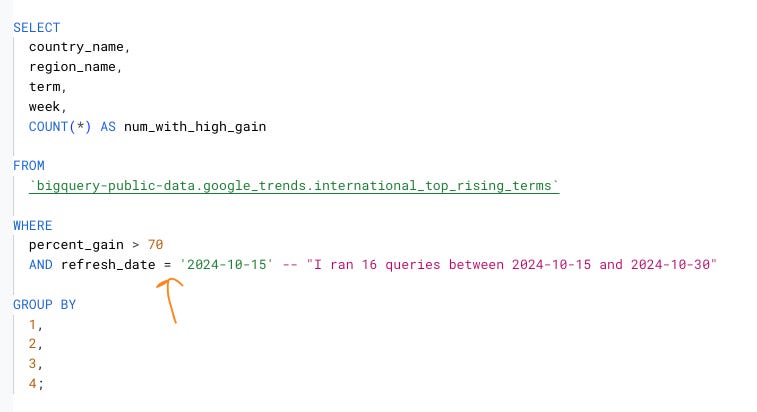

Google Cloud provides an example query that demonstrates the use of normalized_literals to measure the impact on performance, specifically the time (in milliseconds) it takes to run a query before and after optimisation.

It’s similar to a query hash for queries that are nearly identical except for differences in filters, like dates. For example, < '2024-10-15' would share the same query hash as < '2024-10-16'.

The results shown here aren’t particularly useful for the comparison I was trying to make. Starting from the 21st, each day I add another 400MB of data. Even though the query hash matches for queries with just one extra day of data, the additional compute time makes a significant difference. This creates an unfair comparison, where it might look like performance is worse after optimisation is applied, even though that’s not the case.

To address this, I turned off history-based optimisation and reran the queries. This allowed me to make a more accurate comparison by using a different query and leveraging the all_until_date field as the join key to evaluate performance before and after optimisation.

As you can see, every query that has been optimised now shows performance improvements, with some improving by as much as 25%.

This demonstrates that history-based optimisation can have a significant impact on the full refresh query pattern.

On-demand Billing: If you’re using on-demand billing, your queries could finish up to 25% faster without any additional cost.

Slot Users: If you’re using slots, faster query execution means reduced costs. With autoscaling now the default, slot utilisation could improve by an average of ~12%, translating to a 12% cost reduction.

It’s worth noting that these results are based on relatively simple queries. Introducing more complex queries involving joins, window functions, or subqueries could yield even greater performance gains.

Effect on Incremental Models

If you’re working with much larger datasets or need your data to be updated more frequently, you’d likely use an incremental approach to update your models, relying on some form of delta tracking.

For example, with the international_top_rising_terms dataset, performing a full refresh each day could be inefficient with large amounts of data. Instead, you’d process just a single partition—such as one day’s data, based on refresh_date.

Since we already know that optimisation won’t be applied when processing very small amounts of data, my expectation is this: for the first query (e.g., processing data for just one day, like the 21st), the query won’t be optimised. But as I start running queries for the 22nd and beyond, I anticipate that nearly every subsequent query will benefit from optimisation.

The results are exactly as expected (by using this query): no optimisations were applied for the smaller data partitions. However, starting from the 22nd, which leveraged the history from the 21st, every subsequent query was successfully optimised.

Now, let’s examine the impact. While the results aren’t entirely consistent, we’re seeing improvements of up to around 17% for some queries. This translates to:

On-demand: Up to 17% faster query execution times.

Slots: Approximately 10% cost savings.

Summary

It’s great to see that history-based optimisation in BigQuery can reduce compute costs even for similar queries. This is a significant benefit for all BigQuery users. Imagine gaining a 25% improvement in query runtime or 10-12% cost savings (if you’re using slots) without having to do anything. And these results are based on my limited testing with simple queries. For more complex queries that process larger datasets and take longer to run, the potential gains could be even more substantial.

What’s really impressive is how Google is rolling out such impactful cost-saving features and passing the benefits directly to customers instead of keeping them internally. Personally, this is one of the things I appreciate most about Google Cloud—brilliant engineers solving tough problems and delivering meaningful improvements to customers, requiring little to no effort to adopt.

I’m excited to hear about the results you’ll achieve with history-based optimisation in your Google Cloud environment. I strongly believe this feature has the potential to make a big difference in your FinOps initiatives.

Need to modernise your data stack? I specialise in Google Cloud solutions, including migrating your analytics workloads into BigQuery, optimising performance, and tailoring solutions to fit your business needs. With deep expertise in the Google Cloud ecosystem, I’ll help you unlock the full potential of your data. Curious about my work? Check out My Work to see the impact I’ve made. Let’s chat! Book a call at Calendly or email richardhe@fundamenta.co. 🚀📊